TL;DR

- AI memory is transforming customer experience (CX) by turning chatbots from transactional tools into proactive, context-aware partners that remember preferences, improve personalization, and drive retention. Modern solutions, such as a dual-layer system, blend immediate and persistent memory for seamless conversational flow, while industry leaders prioritize infrastructure, privacy, scalability, and relevance.

- Real-world challenges include semantic reasoning, smart forgetting, privacy compliance, and benchmarking long-term effectiveness.

Why memory matters

Memory bridges the gap between one-off queries and ongoing relationships, enabling AI to remember preferences, track progress, and provide continuity without making customers repeat themselves. For CX, this means faster resolutions, tailored recommendations, and proactive support, which reduce frustration while boosting trust and retention. Invent's dual-layer system illustrates the idea: per-chat memory provides immediacy and global memory provides persistence, so conversations feel natural. Without memory, assistants fall back on generic responses, eroding the personalized feel customers crave.
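The dual-layer idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Invent's implementation: the class name `DualLayerMemory`, the `max_turns` window, and the key/value format for global facts are all hypothetical choices made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class DualLayerMemory:
    """Toy two-layer memory (hypothetical): a per-chat buffer plus persistent user facts."""

    max_turns: int = 10  # hypothetical size of the per-chat window
    # Per-chat layer: recent turns, cleared when the chat ends.
    session_turns: list[str] = field(default_factory=list)
    # Global layer: durable facts keyed by attribute, kept across chats.
    global_facts: dict[str, str] = field(default_factory=dict)

    def add_turn(self, text: str) -> None:
        self.session_turns.append(text)
        # Drop the oldest turns so the per-chat window never exceeds max_turns.
        overflow = len(self.session_turns) - self.max_turns
        if overflow > 0:
            del self.session_turns[:overflow]

    def remember(self, key: str, value: str) -> None:
        self.global_facts[key] = value

    def end_chat(self) -> None:
        # The per-chat layer resets; global facts survive into the next chat.
        self.session_turns.clear()

    def build_context(self) -> str:
        # Combine both layers into one context string for the model prompt.
        facts = "; ".join(f"{k}={v}" for k, v in sorted(self.global_facts.items()))
        recent = " | ".join(self.session_turns)
        return f"[facts] {facts}\n[recent] {recent}"
```

After `remember("preferred_channel", "email")`, ending a chat, and starting a new one, `build_context()` still carries the preference while the previous chat's turns are gone, which is the continuity-without-overload trade-off described above.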

In the era of next-gen chatbots, memory is the missing link between personalization and real customer loyalty. With great memory comes great responsibility: privacy, accuracy, and agility are just as vital as recall.

The evolution of AI memory in customer experience

The landscape of AI-driven customer experience has evolved rapidly. Early virtual assistants delivered basic, scripted responses, each interaction standing alone. As user expectations grew and technology matured, the need for AI systems to build long-term memory became clear. Today, customers expect assistants that “know” them, recall past conversations, and can pick up where they left off.

Future-ready CX needs intelligent memory

With advances in large language models (LLMs) and integration across platforms, businesses face new demands:

- Consistent, cross-channel engagement: Customers want continuity between web, app, and in-person support.

- Hyper-personalization: Every touchpoint is expected to reflect remembered preferences, issues, and context.

- Proactive problem solving: Assistants anticipate needs and automate next steps based on historical data.

- Trustworthy, compliant data handling: Privacy and control aren’t features; they’re expectations.

These imperatives are driving a shift from stateless, transactional bots to stateful, adaptive agents, making intelligent memory a CX game-changer.

Leaders in the space

Mem0 and Supermemory lead by tackling the statelessness of LLMs head-on, adding robust memory layers that let assistants learn and adapt over time. Unlike general-purpose platforms, they focus on memory infrastructure that stays reliable across interactions, balancing utility against performance while pushing the boundaries of agentic AI.

Memory benchmarks overview

LongMemEval is a rigorous benchmark for evaluating long-term memory in AI chat assistants. It embeds 500 questions in long user-assistant chat histories, which run to roughly 115k tokens each in its "small" version (LongMemEval_s).

It tests five key capabilities:

- Information extraction from single sessions (user facts, assistant responses, preferences)

- Multi-session reasoning by synthesizing scattered details

- Knowledge updates to handle contradictions or revisions

- Temporal reasoning for time sequences and relative dates

- Abstention on unanswerable queries

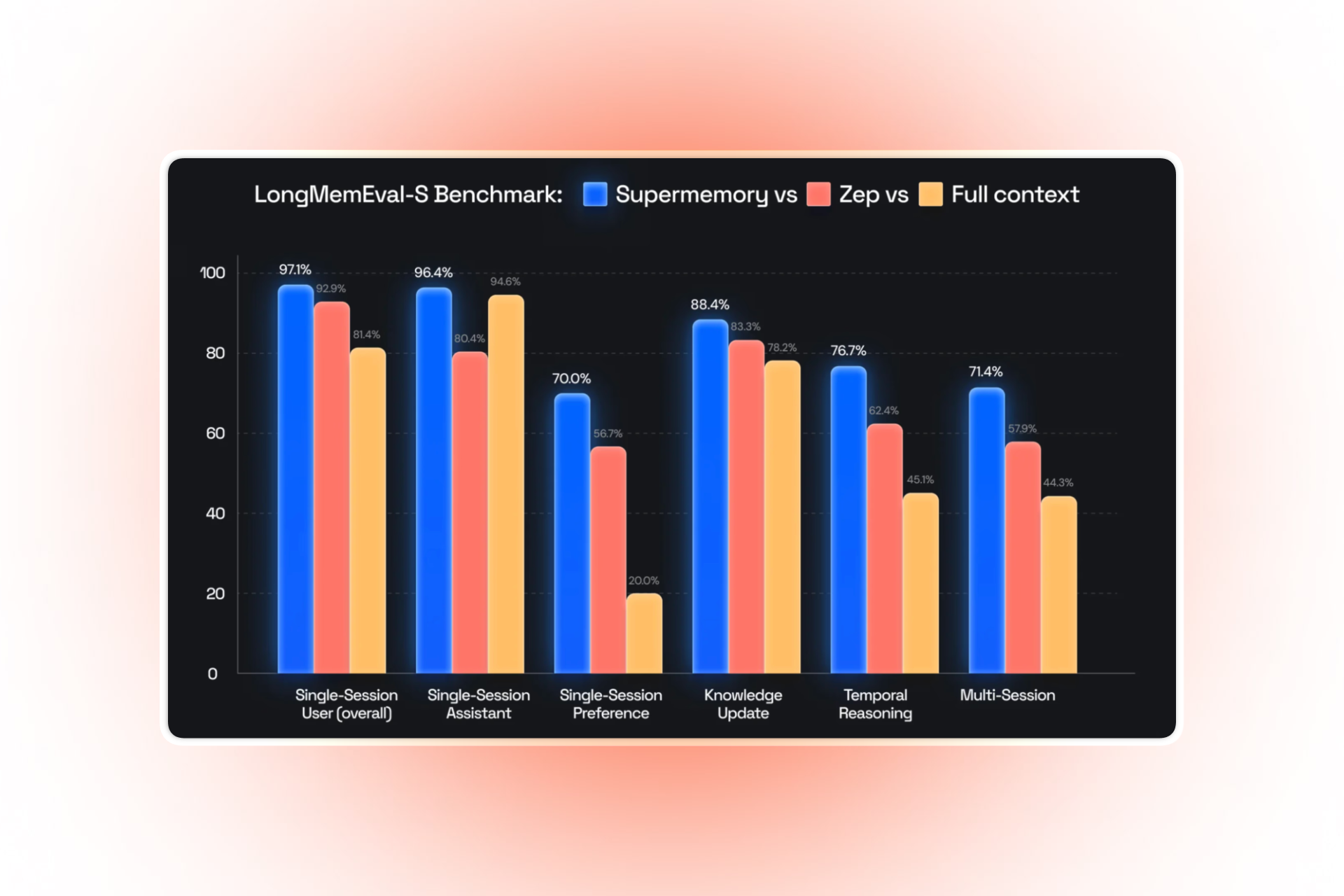

Bar chart comparing the LongMemEval-S Benchmark scores for three memory systems: Supermemory (blue), Zep (red), and Full context (yellow). Results cover six categories: Single-Session User (overall), Single-Session Assistant, Single-Session Preference, Knowledge Update, Temporal Reasoning, and Multi-Session. Supermemory consistently achieves the highest accuracy across all tasks.
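Charts like this one report accuracy per question category. A minimal sketch of that tally follows, assuming each graded answer arrives as a `(category, is_correct)` pair; the input format and the category labels in the usage are illustrative assumptions, not LongMemEval's actual data schema, and the grading step itself is assumed to happen upstream.

```python
from collections import defaultdict


def accuracy_by_category(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Fraction of correctly answered questions in each category.

    Each result is (category, is_correct); deciding is_correct is assumed
    to happen upstream (e.g. by comparing answers to reference answers).
    """
    totals: dict[str, int] = defaultdict(int)
    correct: dict[str, int] = defaultdict(int)
    for category, is_correct in results:
        totals[category] += 1
        correct[category] += int(is_correct)
    return {cat: correct[cat] / totals[cat] for cat in totals}


def overall_accuracy(results: list[tuple[str, bool]]) -> float:
    """Accuracy across all questions, weighted by question count."""
    if not results:
        return 0.0
    return sum(int(ok) for _, ok in results) / len(results)
```

Note that the overall score weights each category by how many questions it contains, so it generally differs from a plain average of the per-category bars.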

Why it matters

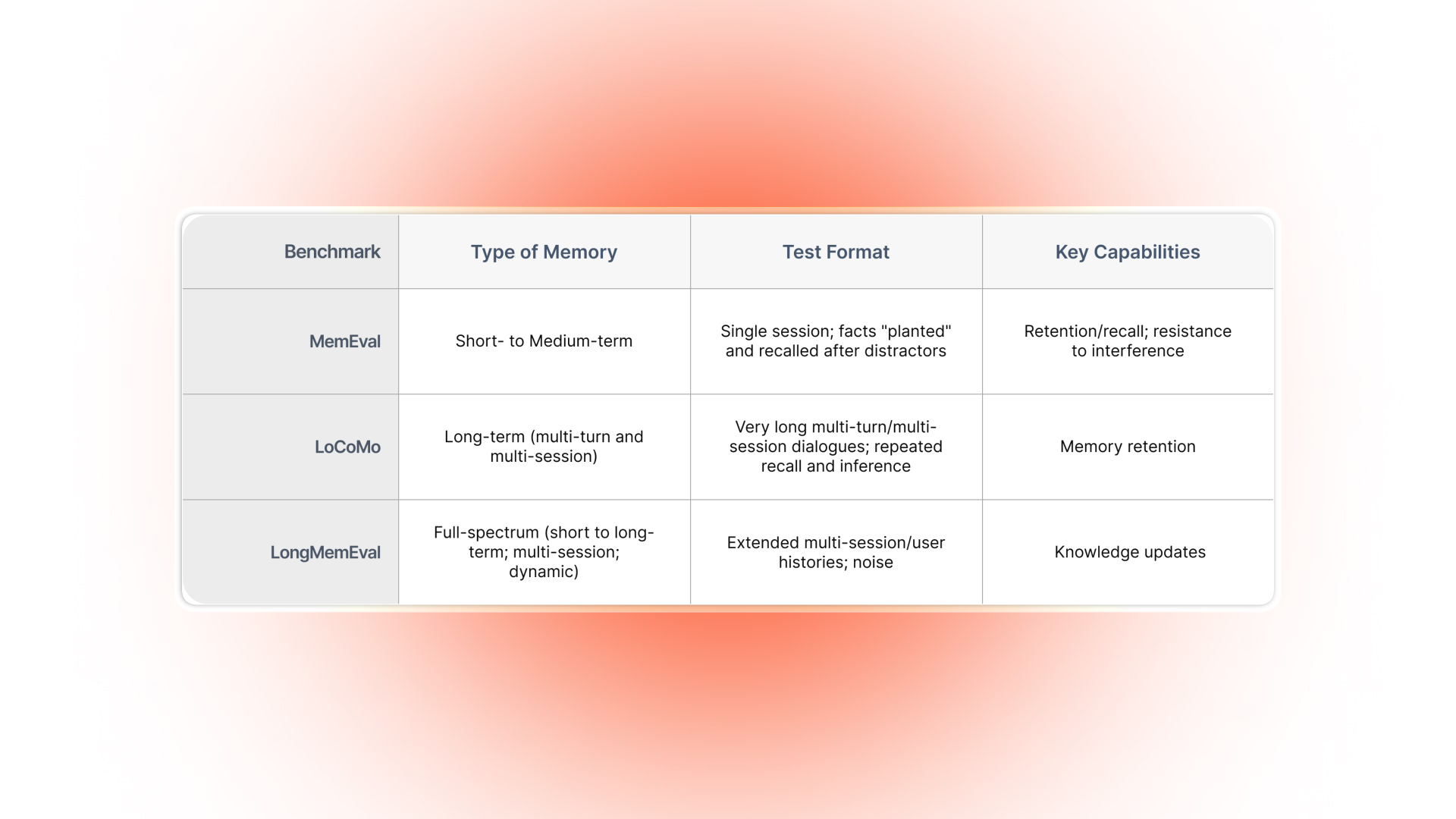

While classic benchmarks like MemEval (which emphasizes short-range retention and recall within limited contexts) and LoCoMo (which focuses on long-range conversational memory across multiple sessions) each probe critical aspects of AI memory, LongMemEval more closely simulates real-world chaos: it introduces noise, distractors, evolving knowledge, and dynamic, multi-layered interaction.

LongMemEval uniquely exposes 30–60% performance drops in current commercial LLMs and long-context models on these sustained, realistic tasks. This drives innovations like Supermemory's state-of-the-art results (e.g., 81.95% on temporal reasoning), marking a leap toward reliable, agentic AI capable of coherent, personalized long-term conversations.

By setting a new gold standard, LongMemEval guides the development of next-generation scalable memory systems, pushing them beyond basic retrieval-augmented generation (RAG) and measuring more than earlier benchmarks could.

Comparison table of memory evaluation benchmarks for AI: MemEval (short- to medium-term, single session, tests recall), LoCoMo (long-term, multi-turn/session, tests memory retention), and LongMemEval (full-spectrum, dynamic, tests knowledge updates).

To learn more about the benchmark itself, see the paper "LongMemEval: Benchmarking Chat Assistants on Long-Term Interactive Memory"; for Supermemory's performance on LongMemEval, see the Supermemory research page.

Our approach at Invent

We build stateful infrastructure for AI assistants, chatbots, and LLM agents, combining relevance scoring, efficient capacity limits, and automatic data reconciliation with advanced semantic retrieval. We layer short-term session context, long-term user facts, and cross-session blending, all secured with encryption and user controls so the system scales seamlessly. Actions integrate external data (CRMs, workflows) to make memory active rather than passive, powering decisions and automation.
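To make "retrieval with a capacity cap" concrete, here is a minimal ranking sketch. It is an assumption-laden stand-in, not Invent's retrieval stack: `vectorize` uses bag-of-words term counts instead of a real embedding model, so it only captures word overlap rather than true semantics, and `retrieve` and its `top_k` cap are hypothetical names.

```python
import math
from collections import Counter


def vectorize(text: str) -> Counter:
    # Stand-in for an embedding model: a bag-of-words term-count vector.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    # Counter returns 0 for missing terms, so non-shared words add nothing.
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, memories: list[str], top_k: int = 3) -> list[str]:
    """Return at most top_k stored memories, ranked by similarity to the query."""
    q = vectorize(query)
    ranked = sorted(memories, key=lambda m: cosine(q, vectorize(m)), reverse=True)
    return ranked[:top_k]
```

Swapping `vectorize` for a real embedding model would keep the rest unchanged; `top_k` is what bounds how much memory reaches the prompt.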

Key challenges

- Scalability strains as memories grow, demanding smart forgetting and compaction to avoid bloat.

- Relevance hinges on semantic reasoning beyond keywords, grasping intent, ambiguity, and context, while privacy requires ironclad controls amid rising regulations.

- Measuring quality lacks mature benchmarks for continuity or satisfaction, and ecosystem standards lag, hindering interoperability.

17 questions you should ask yourself to understand AI memory for your CX journey

Use these questions to audit your current CX tech stack, discover memory gaps, and inform conversations with vendors or IT teams.

What types of memory does the platform support?

How does the system personalize responses across sessions and channels?

Can the memory handle knowledge updates and contradictions?

Does the solution sync memory across web, app, and human-assisted channels?

What privacy and data protection controls are built in?

How does it comply with regulations and standards such as GDPR, HIPAA, or SOC 2?

Is there a user-facing way for customers to view or correct their stored ‘profile’ or preferences?

How is memory scored, summarized, or pruned (“smart forgetting”)?

How does memory affect bot performance, latency, and scale?

Can the platform trigger actions or workflows based on stored context?

How does the system ensure relevance and avoid hallucinated ‘memories’?

Does it support multimodal and multilingual memory (text, voice, images, other languages)?

Are there transparent logs or audit trails for memory changes?

Can users or admins set memory limits or customize retention periods?

What are the pricing tiers and what do they include (memory size, API calls, compliance tools)?

Is there enterprise support, SLAs, and upgrade pathways for scaling CX?

What real-world business impact can I expect: higher CSAT, NPS, retention, or sales?

FAQs

How reliable is AI memory across long sessions?

Many systems reset session memory with each chat to keep context fresh, but persist key facts globally; advanced setups like Mem0, Supermemory, and Invent blend the two for continuity without overload.

What about privacy risks with stored data?

Encryption, SOC 2 compliance, and manual delete controls protect stored information, and users can toggle memory features at any time, putting consent ahead of persistence.
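A consent-first store can be sketched as below. This is a hypothetical illustration, not any vendor's API: `ConsentAwareStore` and its methods are invented names, encryption is deliberately left out, and the design choice here is that opting out blocks new writes and hides stored data, while `forget_user` is the separate, explicit delete.

```python
class ConsentAwareStore:
    """Hypothetical per-user memory store that honors opt-out and deletion."""

    def __init__(self) -> None:
        self._facts: dict[str, dict[str, str]] = {}
        self._opted_out: set[str] = set()

    def set_memory_enabled(self, user_id: str, enabled: bool) -> None:
        # Users can toggle memory on or off at any time.
        if enabled:
            self._opted_out.discard(user_id)
        else:
            self._opted_out.add(user_id)

    def remember(self, user_id: str, key: str, value: str) -> bool:
        # Consent comes first: nothing is written for users who opted out.
        if user_id in self._opted_out:
            return False
        self._facts.setdefault(user_id, {})[key] = value
        return True

    def recall(self, user_id: str) -> dict[str, str]:
        # Opted-out users get no personalization, even if data still exists.
        if user_id in self._opted_out:
            return {}
        return dict(self._facts.get(user_id, {}))

    def forget_user(self, user_id: str) -> None:
        # Manual delete: drop everything stored for this user.
        self._facts.pop(user_id, None)
```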

Can AI forget irrelevant details automatically?

Yes. Scoring systems prioritize high-value items (preferences, goals, facts) and merge or summarize the rest when limits are hit, loosely mimicking how human memory works.
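A minimal sketch of score-based compaction, under assumptions: the category weights, the small bonus per retrieval hit, and the `MemoryItem`/`compact` names are hypothetical, and the "summary" is a plain concatenation standing in for what a real system would likely generate with an LLM.

```python
from dataclasses import dataclass

# Hypothetical weights: preferences, goals, and facts count as high value.
CATEGORY_WEIGHT = {"preference": 3.0, "goal": 3.0, "fact": 2.0, "chitchat": 0.5}


@dataclass
class MemoryItem:
    text: str
    category: str
    hits: int = 0  # how often the item has been retrieved


def score(item: MemoryItem) -> float:
    # Unknown categories get a neutral weight; frequent use adds a small bonus.
    return CATEGORY_WEIGHT.get(item.category, 1.0) + 0.1 * item.hits


def compact(items: list[MemoryItem], limit: int) -> list[MemoryItem]:
    """Keep the highest-scoring items; fold the rest into one summary item."""
    if limit < 1:
        raise ValueError("limit must be at least 1")
    if len(items) <= limit:
        return list(items)
    ranked = sorted(items, key=score, reverse=True)
    # Reserve one slot for the summary so the result has exactly `limit` items.
    kept, dropped = ranked[: limit - 1], ranked[limit - 1 :]
    # Placeholder summary: a real system would summarize, not concatenate.
    summary = MemoryItem(
        text="summary: " + "; ".join(i.text for i in dropped),
        category="summary",
    )
    return kept + [summary]
```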

How does memory handle conflicting info?

Continuous reconciliation scans for duplicates or contradictions, updating based on recency and relevance during sessions.
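Recency-based reconciliation can be sketched as follows. This covers only the recency half of the answer above (relevance weighting is omitted), and the `Fact` record and `reconcile` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Fact:
    key: str  # attribute the fact describes, e.g. "shipping_city"
    value: str
    observed_at: datetime


def reconcile(facts: list[Fact]) -> dict[str, Fact]:
    """Collapse duplicates and contradictions, keeping the newest fact per key."""
    latest: dict[str, Fact] = {}
    for fact in facts:
        current = latest.get(fact.key)
        # A newer observation replaces an older one; older ones are ignored.
        if current is None or fact.observed_at > current.observed_at:
            latest[fact.key] = fact
    return latest
```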

What's the biggest hurdle for production use?

Semantic retrieval for vague queries, and benchmarking long-term recall. Emerging tools like ours address both for real-world CX gains.

How to integrate AI memory features into my workflow?

Integration depends on the platform. Many leading AI memory solutions provide APIs, SDKs, and plug-ins that connect to your CRM, ticketing, and workflow tools. Start by identifying key touchpoints where memory adds value (like customer history, preferences, or open issues). Map these to your workflows, use provided libraries or APIs, and configure permission/consent settings. For low-code environments, some vendors offer no-code connectors or prebuilt Zapier integrations.
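The "map touchpoints, then check consent" steps above might look like this in a webhook-style handler. Everything here is a hypothetical sketch: the event type strings, the `TOUCHPOINTS` mapping, and the `handle_event` signature are invented for illustration and do not correspond to any specific CRM or vendor API.

```python
from collections.abc import Callable

# Hypothetical mapping from CRM/ticketing event types to the memory key they update.
TOUCHPOINTS: dict[str, str] = {
    "ticket.opened": "open_issue",
    "ticket.closed": "last_resolved_issue",
    "profile.updated": "preferences",
}


def handle_event(
    event: dict[str, str],
    consent: Callable[[str], bool],
    memory: dict[str, dict[str, str]],
) -> bool:
    """Write an incoming event into a user's memory if it maps to a touchpoint.

    Returns True if memory was updated.
    """
    key = TOUCHPOINTS.get(event.get("type", ""))
    user_id = event.get("user_id")
    # Ignore unmapped events, events without a user, and users without consent.
    if key is None or user_id is None or not consent(user_id):
        return False
    memory.setdefault(user_id, {})[key] = event.get("summary", "")
    return True
```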

What are the pricing options for popular AI memory platforms?

Pricing structures vary but typically include:

- Subscription tiers: Based on number of users, conversations, or stored memory volume.

- Usage-based: Pay-per-session or by API call.

- Enterprise options: Custom quotes for advanced features (compliance, encryption, API access, premium support).

- Free trials or free plans: Many platforms offer limited free trials or tiers for evaluation. Check current rates on the pricing pages of major platforms like Invent, Mem0, and Supermemory.

Which AI memory apps sync across multiple devices seamlessly?

Most leading AI memory platforms support cloud-based storage and account-based data, allowing you to access persistent memory across web, mobile apps, and desktop clients. Look for features like:

- Real-time sync: Updates reflected on all logged-in devices instantly.

- Cross-platform SDKs: For custom apps or integrations.

- User authentication: Ensures secure, personalized context regardless of device.

Top vendors, including Mem0 and Supermemory, explicitly advertise multi-device and cross-channel syncing as core capabilities.

Are there subscription plans for AI memory services?

Yes, most services offer tiered subscription models for individuals, businesses, and enterprises. Plans typically vary by:

- Features: Session memory limits, global memory size, privacy controls, API access, collaborative tools.

- Support: Priority support, onboarding, and custom integrations for higher tiers.

- Compliance options: SOC 2, GDPR, and custom compliance for regulated industries.

Monthly and annual billing options are standard, with discounts for longer commitments and pilot plans for evaluation.

Conclusion

As customer expectations rise, AI memory is rapidly moving from a novelty to a necessity for delivering world-class customer experience. By elevating chatbots and assistants from simple responders to trusted, context-aware partners, memory drives deeper personalization, accelerates resolutions, and boosts customer retention.

Forward-thinking CX leaders are already embracing platforms that blend session context, long-term memory, and strong privacy controls to transform interactions, improve conversational AI, and stay ahead of the curve.

Keeping pace with these advances is about creating experiences that customers remember and return for.

Ready to build customer relationships that last?

Start creating your own with Invent now.